projects

advanced computer vision, signal processing, and deep learning solutions across academic research and industry applications

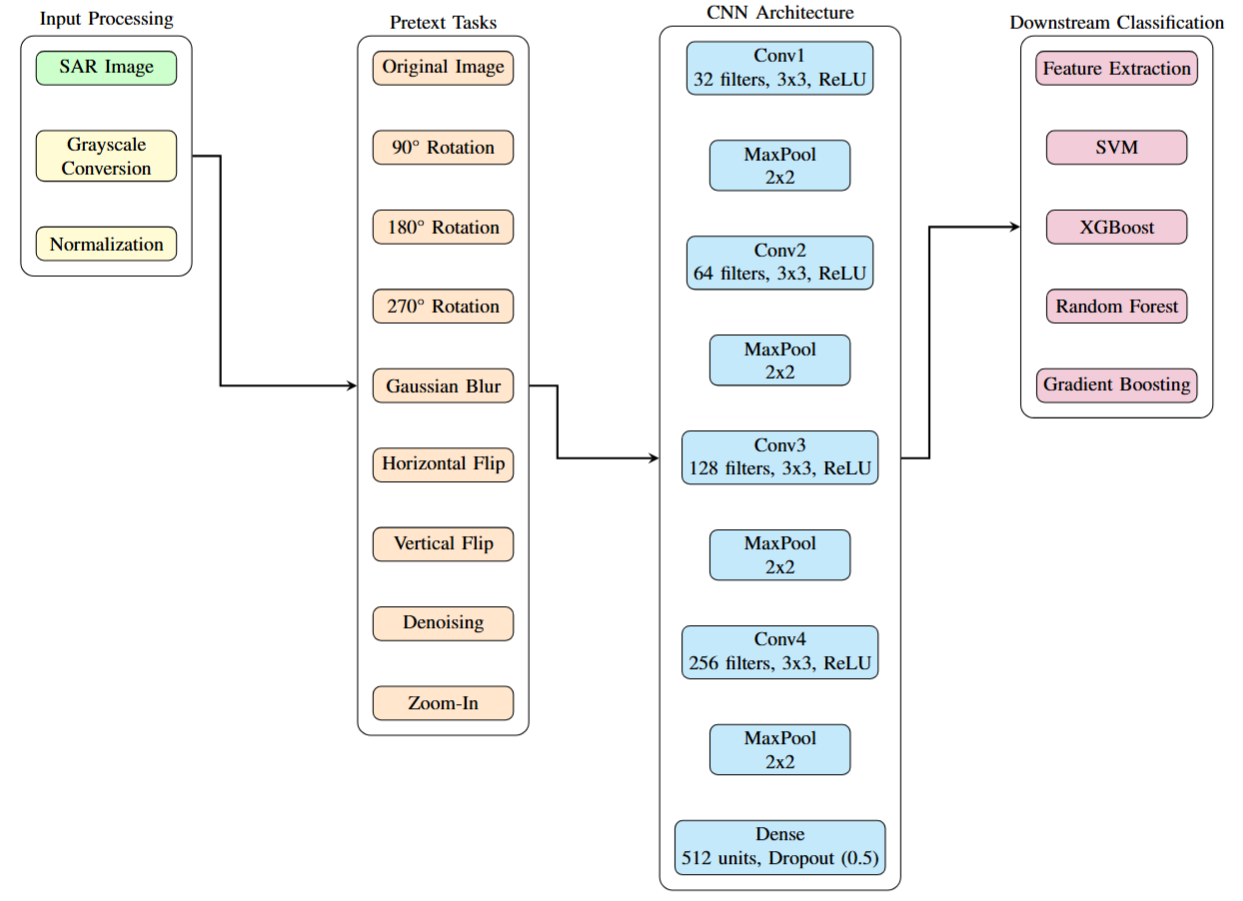

Self-Supervised Learning for SAR Target Recognition with Multi-Task Pretext Training

We developed a self-supervised learning framework for Synthetic Aperture Radar (SAR) Automatic Target Recognition (ATR) that removes the dependency on synthetic training data while improving recognition performance. The framework uses multi-task pretext training with nine complementary transformation tasks to learn robust feature representations directly from measured SAR data. In our experiments it reached 89.78% accuracy with a Support Vector Machine (SVM) classifier and maintained robust detection even with limited training data, outperforming traditional methods that rely on synthetic data augmentation. This work lays a foundation for applying self-supervised learning in domain-specific applications where labeled data are scarce.
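The nine transformation tasks are not listed here. As a minimal sketch of how pretext labels can be derived from unlabeled measured chips without any human annotation, the snippet below assumes a hypothetical subset of six geometric transformations and frames the pretext task as "predict which transformation was applied". The names `TRANSFORMS` and `make_pretext_samples` are illustrative only, not the framework's actual code.

```python
from typing import Callable, Dict, List, Tuple

# A single-channel SAR image chip stored as rows of pixel magnitudes.
Chip = List[List[float]]


def rot90(chip: Chip) -> Chip:
    """Rotate the chip 90 degrees clockwise."""
    return [list(row) for row in zip(*chip[::-1])]


def hflip(chip: Chip) -> Chip:
    """Mirror the chip left to right."""
    return [row[::-1] for row in chip]


def vflip(chip: Chip) -> Chip:
    """Mirror the chip top to bottom."""
    return [list(row) for row in chip[::-1]]


# Hypothetical pretext transformations (an illustrative subset, NOT the nine
# tasks used in the work). The dict order defines each task's integer label.
TRANSFORMS: Dict[str, Callable[[Chip], Chip]] = {
    "identity": lambda c: [list(r) for r in c],
    "rot90": rot90,
    "rot180": lambda c: rot90(rot90(c)),
    "rot270": lambda c: rot90(rot90(rot90(c))),
    "hflip": hflip,
    "vflip": vflip,
}


def make_pretext_samples(chips: List[Chip]) -> List[Tuple[Chip, int]]:
    """Turn unlabeled chips into (transformed_chip, task_id) training pairs.

    The label comes from the transformation itself, so no manual
    annotation of the measured SAR data is required.
    """
    samples: List[Tuple[Chip, int]] = []
    for chip in chips:
        for task_id, name in enumerate(TRANSFORMS):
            samples.append((TRANSFORMS[name](chip), task_id))
    return samples
```

In a setup like this, a network trained on the `(chip, task_id)` pairs would act as a feature extractor, and its features would then be passed to a downstream classifier such as an SVM.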

Automated Range of Motion (ROM) Measurement using Human Pose Estimation

We developed an automated Range of Motion (ROM) measurement application for web and mobile that serves patients and healthcare providers, with real-time monitoring and scalable deployment. The platform supports over 200 active and passive ROM exercises and uses full-body pose estimation models to analyze patient videos accurately. To further improve precision, we fine-tuned exercise-specific pose estimation models on custom in-house datasets. For scalability, we designed a distributed system with a master-slave architecture that efficiently handles high volumes of API requests, and we used Amazon EC2 and S3 to provide robust cloud infrastructure that supports ROM assessments in both telehealth and clinical settings.
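A common way to turn pose estimation output into a ROM value is to compute a joint angle in every frame and take the spread between its minimum and maximum. The sketch below assumes 2D `(x, y)` keypoints for a proximal point, the joint, and a distal point (for example shoulder, elbow, and wrist) and measures the included angle between the two limb segments. The function names are hypothetical, and clinical protocols that measure against an anatomical or vertical reference would need a different angle definition.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) image coordinates of one keypoint


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Included angle in degrees at joint b, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("degenerate keypoints: a limb segment has zero length")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding drift
    return math.degrees(math.acos(cos_theta))


def range_of_motion(
    frames: Sequence[Tuple[Point, Point, Point]],
) -> Tuple[float, float, float]:
    """Return (min_angle, max_angle, rom) over per-frame (proximal, joint, distal) keypoints."""
    angles = [joint_angle(a, b, c) for a, b, c in frames]
    if not angles:
        raise ValueError("no frames to analyze")
    lo, hi = min(angles), max(angles)
    return lo, hi, hi - lo
```

For example, a straight arm (180 degrees) followed by an elbow bent to 90 degrees yields a ROM of about 90 degrees. In practice, per-frame keypoint jitter usually calls for smoothing before taking the extremes.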

Abnormal Gait Analysis using Video-based Signal Processing

This project developed a gait event and abnormality detection system based on pose estimation. The system uses a signal-based approach to gait event detection that proved more accurate than traditional angle-based methods, and the work resulted in a patented solution. We analyzed a diverse range of abnormal gait patterns, including Antalgic, Ataxic, Hemiplegic, Parkinsonian, and Trendelenburg gaits, using an in-house database of human participants. Close collaboration with MyMedicalHUB's (MMH) clinical team of physicians and physical therapists ensured the system's clinical relevance and accuracy.
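The patented method itself is not described here. To illustrate the general idea of treating a keypoint trajectory as a signal, the sketch below smooths the per-frame vertical coordinate of an ankle keypoint and picks local peaks as candidate gait events. It assumes image coordinates where y grows downward, so a peak marks the frame where the foot is lowest. The peak rule, the `window` and `min_gap` defaults, and all function names are hypothetical and are not the project's algorithm.

```python
from typing import List, Sequence


def moving_average(signal: Sequence[float], window: int = 3) -> List[float]:
    """Centered moving average; windows shrink at the edges of the signal."""
    if window < 1:
        raise ValueError("window must be >= 1")
    half = window // 2
    out: List[float] = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        seg = signal[lo:hi]
        out.append(sum(seg) / len(seg))
    return out


def local_maxima(signal: Sequence[float], min_gap: int = 1) -> List[int]:
    """Indices of local maxima at least min_gap frames apart.

    A strict rise followed by a non-strict fall means a flat plateau is
    reported once, at its first frame.
    """
    peaks: List[int] = []
    for i in range(1, len(signal) - 1):
        if signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]:
            if not peaks or i - peaks[-1] >= min_gap:
                peaks.append(i)
    return peaks


def detect_gait_events(
    ankle_y: Sequence[float], window: int = 3, min_gap: int = 5
) -> List[int]:
    """Hypothetical rule: candidate events are frames where the smoothed
    ankle y-coordinate peaks (foot lowest in the image)."""
    return local_maxima(moving_average(ankle_y, window), min_gap)
```

The `min_gap` constraint suppresses double detections within a single step. A real system would tune it to frame rate and walking speed and would still need to label each event (for example heel strike versus toe-off).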

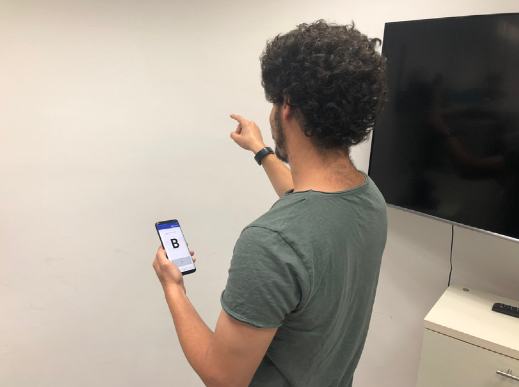

Deep Learning Based Air-Writing Recognition with the Choice of Proper Interpolation Technique

We developed a deep learning-based air-writing recognition system that addresses a critical challenge in time-series data: variable signal length. We systematically investigated different interpolation techniques for this problem on seven publicly available air-writing datasets and developed a method that recognizes air-written characters with a 2D-CNN model. Under both user-dependent and user-independent evaluation protocols, our method outperformed state-of-the-art methods by a clear margin on every dataset, reaching up to 100% accuracy on digit recognition and delivering significant improvements across all character recognition tasks.
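To show what normalizing signal length by interpolation involves, the sketch below resamples a variable-length air-writing trajectory to a fixed number of points with linear interpolation and stacks the x and y channels into a 2 x L array. Linear interpolation is only one of the candidate techniques such a comparison would cover. The target length of 64, the 2 x L layout fed to the 2D-CNN, and the function names are assumptions for illustration.

```python
from typing import List, Sequence


def resample_linear(signal: Sequence[float], target_len: int) -> List[float]:
    """Linearly interpolate a 1-D signal onto target_len evenly spaced points
    spanning its first to last sample (endpoints are preserved)."""
    n = len(signal)
    if n == 0 or target_len < 1:
        raise ValueError("need a non-empty signal and target_len >= 1")
    if n == 1:
        return [float(signal[0])] * target_len
    if target_len == 1:
        return [float(signal[0])]
    step = (n - 1) / (target_len - 1)
    out: List[float] = []
    for i in range(target_len):
        pos = i * step
        j = min(int(pos), n - 2)  # left neighbor; clamp so j + 1 stays in range
        frac = pos - j
        out.append(signal[j] * (1 - frac) + signal[j + 1] * frac)
    return out


def trajectory_to_fixed_length(
    xs: Sequence[float], ys: Sequence[float], target_len: int = 64
) -> List[List[float]]:
    """Resample x and y independently and stack them as a 2 x target_len array
    (an assumed input layout for a 2D-CNN)."""
    if len(xs) != len(ys):
        raise ValueError("x and y must have the same number of samples")
    return [resample_linear(xs, target_len), resample_linear(ys, target_len)]
```

Because each recording is mapped to the same length, trajectories captured at different speeds or sampling durations can be batched together. Swapping `resample_linear` for another scheme (for example cubic or nearest-neighbor) is how different interpolation techniques could be compared under the same model.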